Gunicorn Flask Timeout When Uploading a Big File

Timeouts on Heroku? It's Probably You

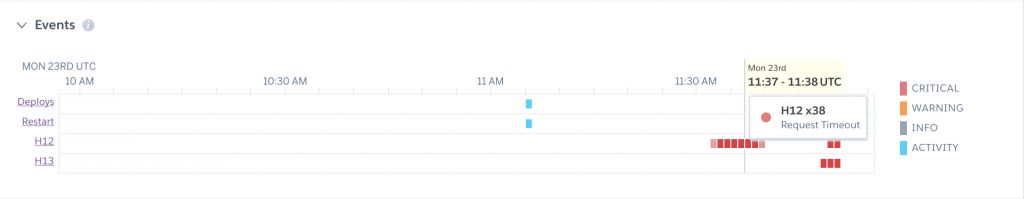

Have you ever logged into Heroku to see a short stretch of request failures, high response times, and H12 Request Timeout errors?

The first thing that comes to mind is 'something is wrong with Heroku!'. But you check their status page and there are no reported problems.

Trust me, I have been there! Here is proof:

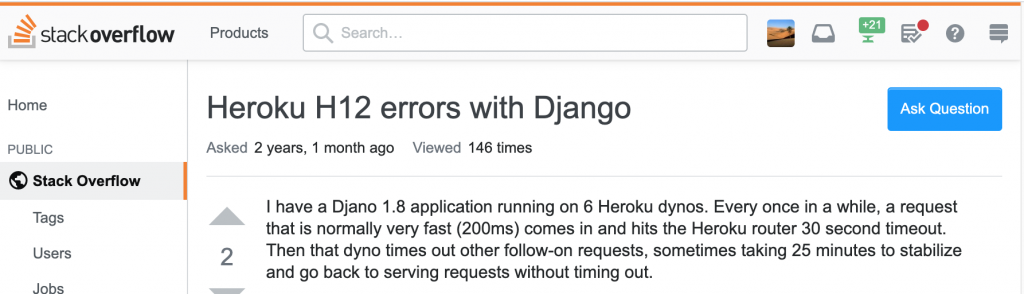

Unable to solve the problem, you think 'it must be gremlins' and move on. In this post I want to show you why it's probably not gremlins and it's probably not Heroku.

The issue is likely that you do not have proper timeouts set within your own code. Let's discuss the problem, do an experiment to demonstrate the issue, then offer some solutions.

Note: I will cover the standard synchronous worker in this post, and will write about asynchronous (gevent) situations in another article.

The Problem

Your web application depends on different services, be it a PostgreSQL database, Redis cache, or an external API. At some point one or more of those services may slow down, either due to a temporary issue or something of your own design, such as a slow database query.

You may also experience slowdowns due to unexpected load on your website. This can come from bots that quickly overwhelm your app.

Without proper timeouts, these short slowdowns cascade into longer ones lasting beyond 30 seconds. If Heroku does not receive a response from your app within 30 seconds, it returns an error to the user and logs an H12 timeout error. This does not stop your web app from working on the request.

Demonstration

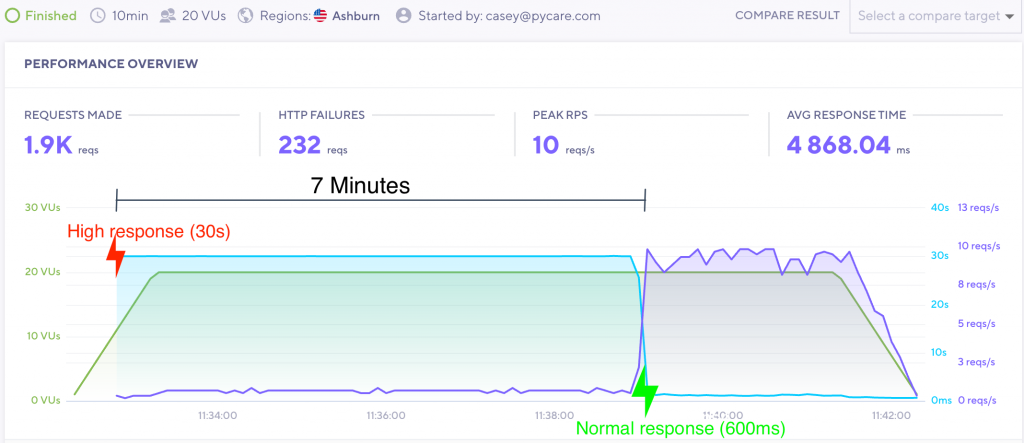

To demonstrate the problem, I am going to create a Flask app that simulates a very slow API service. The app is served with gunicorn and logs each visit in Redis via a counter. The first 75 requests are slowed way down, taking 45 seconds to respond. The rest of the requests are processed in 600 milliseconds. Here is the script:

```
# Procfile
web: gunicorn app:app
```

```python
# app.py
import os
import time

import redis
from flask import Flask

app = Flask(__name__)
r = redis.from_url(os.environ.get('REDIS_URL', 'redis://localhost:6379'))


@app.route('/')
def hello_world():
    # Count every visit; INCR returns the new value atomically.
    count = r.incr('counter', 1)
    if count <= 75:
        time.sleep(45)   # the first 75 requests simulate a very slow API
    else:
        time.sleep(0.6)  # everything after that takes 600 ms
    return 'Hello World!'
```

Let's run this app with the wonderful k6 load testing tool for ten minutes.

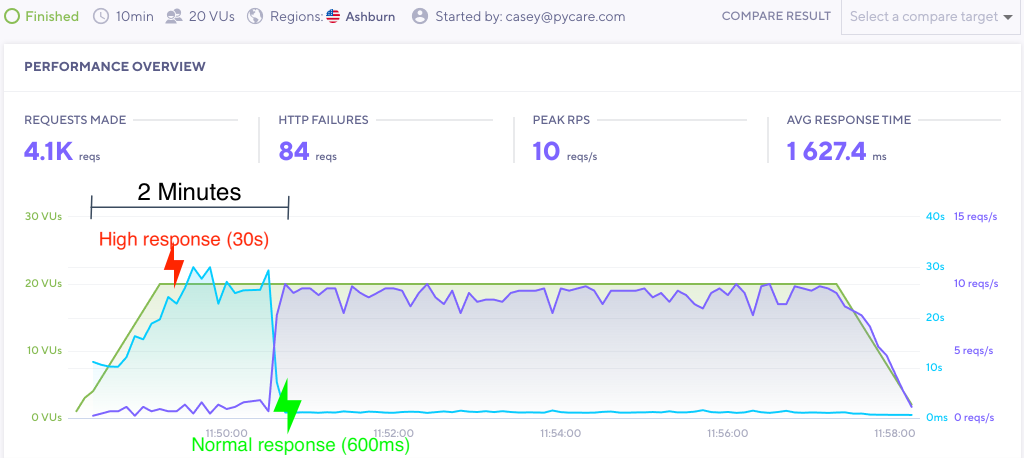

Focus on the blue line, which is the overall response time. The response time immediately goes to 30 seconds and stays there for 7 minutes! This is despite the slow request portion lasting only two minutes. As you can see, there is a residual effect from the slow responses, in that the app is still trying to process requests long after the incident is over.

To make matters worse, any slowdowns that occur near the end of those 7 minutes will easily extend the time in failure. So an unstable service could make your app unusable for over an hour if the problem keeps reappearing.

The Solution

Let's run the scenario again but set a 10 second timeout in gunicorn, like this:

```
# Procfile
web: gunicorn app:app --timeout 10
```
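If you would rather keep settings out of the Procfile, gunicorn can also read a Python config file. A minimal sketch, assuming a file named `gunicorn.conf.py` next to `app.py` and a Procfile line of `web: gunicorn app:app -c gunicorn.conf.py`; the `graceful_timeout` and worker-count values are illustrative choices, not part of the original experiment.

```python
# gunicorn.conf.py (same timeout as `gunicorn app:app --timeout 10`)
import os

# Workers silent for more than this many seconds are killed and restarted.
timeout = 10

# Time a worker gets to finish in-flight requests after a restart signal.
graceful_timeout = 10

# Number of worker processes. Heroku's Python buildpack typically sets
# WEB_CONCURRENCY; the fallback of 2 is an illustrative assumption.
workers = int(os.environ.get("WEB_CONCURRENCY", "2"))
```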

This timeout kills and restarts workers that have been silent for longer than the number of seconds set. Here is the same scenario with a 10 second timeout:

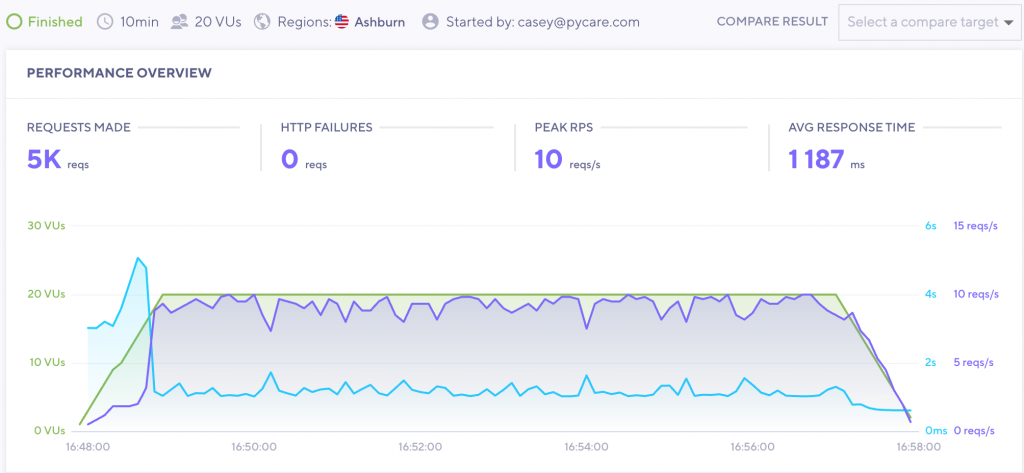

See how fast that recovery is? With a 10 second timeout the response time gradually increases, but goes back to normal almost immediately once the problem goes away.
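To see why the recovery is so much faster, here is a toy model (not a reproduction of the k6 run). It assumes requests arrive at a fixed rate, sync workers serve them first-come-first-served, the first few take 45 seconds, and the router gives up at 30 seconds while the worker keeps going. The hypothetical `worker_timeout` parameter mimics gunicorn's `--timeout` by capping how long one request can occupy a worker, and it assumes a killed worker is replaced instantly.

```python
def simulate(n_requests, slow_count, arrival_interval=1.0, slow_s=45.0,
             fast_s=0.6, workers=1, worker_timeout=None, router_timeout=30.0):
    """Toy FCFS model of sync gunicorn workers behind Heroku's router.

    Returns one (arrival_time, latency, h12) tuple per request, where latency
    is how long the app takes to finish (or abandon) the request and h12 is
    True when that exceeds the router's limit.
    """
    free_at = [0.0] * workers  # time at which each worker next becomes idle
    results = []
    for i in range(n_requests):
        arrival = i * arrival_interval
        service = slow_s if i < slow_count else fast_s
        if worker_timeout is not None:
            # The worker is killed after `worker_timeout` seconds; assume the
            # replacement is ready immediately.
            service = min(service, worker_timeout)
        w = min(range(workers), key=lambda k: free_at[k])  # first idle worker
        start = max(arrival, free_at[w])
        free_at[w] = start + service
        latency = free_at[w] - arrival
        results.append((arrival, latency, latency > router_timeout))
    return results


def count_h12(results):
    return sum(1 for _, _, h12 in results if h12)


without = simulate(n_requests=20, slow_count=2)
with_timeout = simulate(n_requests=20, slow_count=2, worker_timeout=10)
print(count_h12(without), count_h12(with_timeout))  # 20 0
```

With one worker and only two slow requests, all 20 requests still exceed 30 seconds without a timeout, because the fast requests queue behind 90 seconds of slow work. With a 10 second cap the two slow requests still fail, but they fail quickly, and the backlog never reaches the router limit.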

An Alternate Solution – Set a Timeout on the API Request

Now I know someone out there is saying 'duh Casey, just set a timeout when you call the API!'. Well, you are not wrong. If you use requests to handle your API call, you can simply set a timeout on the API request like so:

```python
import requests

url = 'https://api.example.com/slow-endpoint'  # hypothetical API URL
r = requests.get(url, timeout=3)
```

In this scenario our gunicorn timeout may not be needed, as requests will raise an error when the API takes longer than 3 seconds to accept the connection or to send data. (The timeout applies to each of those waits, not to the total download time.) Let's try our scenario again with this timeout in place:

The response time slowly climbs up to 5 seconds, but quickly resolves once the trouble goes away.
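The same idea works without requests. As a sketch using only the standard library, `urllib.request.urlopen` accepts a `timeout` in seconds. The local `SlowAPI` server below is a hypothetical stand-in for the slow service that waits 10 seconds before answering; the thing to notice is that the caller gives up after the timeout instead of tying up the worker for the full duration.

```python
import socket
import threading
import time
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class SlowAPI(BaseHTTPRequestHandler):
    """Hypothetical slow service: answers only after 10 seconds."""

    def do_GET(self):
        time.sleep(10)
        self.send_response(200)
        self.end_headers()
        self.wfile.write(b"too late")


def fetch(url, timeout=3.0):
    """Return the response body, or None if the service is too slow or down."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.read().decode()
    except (socket.timeout, urllib.error.URLError):
        # socket.timeout: no response within `timeout` seconds after connecting.
        # URLError: connection refused, DNS failure, or a connect timeout.
        return None


server = ThreadingHTTPServer(("127.0.0.1", 0), SlowAPI)  # port 0 = any free port
threading.Thread(target=server.serve_forever, daemon=True).start()
url = f"http://127.0.0.1:{server.server_address[1]}/"

started = time.monotonic()
print(fetch(url, timeout=1.0))  # None
print(f"gave up after {time.monotonic() - started:.1f} s")
```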

This approach provides another benefit, in that we can check our error logs and see what caused the problem. Rather than a generic Heroku timeout error, we can see that a specific API service timed out during a particular timeframe. We can even handle the error gracefully and display a message to our users while the API is unavailable:

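```python
import requests

url = 'https://api.example.com/slow-endpoint'  # hypothetical API URL
context = {}  # template context passed to the page

try:
    requests.get(url, timeout=3)
except requests.exceptions.Timeout:
    # Tell the user instead of letting the worker hang.
    context['message'] = 'This service is not available.'
```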

We can set timeouts on other services as well, such as Elasticsearch:

```python
import os

ELASTICSEARCH = {
    'default': {
        'hosts': os.environ.get('ELASTIC_ENDPOINT', 'localhost:9200'),
        'http_auth': os.environ.get('ELASTIC_AUTH', ''),
        'timeout': 5,  # seconds to wait for Elasticsearch before giving up
    },
}
```

The goal is that the lower-level service catches the timeout situation first, while the gunicorn timeout is there to catch the broader unexpected situations.
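The same pattern applies to the Redis and PostgreSQL connections mentioned earlier. A sketch of settings you might pass along, assuming the redis-py and psycopg2 clients: `socket_timeout`, `socket_connect_timeout`, `connect_timeout` and the `statement_timeout` server option are real connection parameters for those libraries, but the 2, 3 and 5 second values are placeholders to tune for your app.

```python
import os

REDIS_URL = os.environ.get("REDIS_URL", "redis://localhost:6379")

# Keyword arguments for redis.from_url() (redis-py), in seconds.
REDIS_OPTIONS = {
    "socket_connect_timeout": 2,  # give up if Redis cannot be reached in 2 s
    "socket_timeout": 2,          # give up if a command gets no reply in 2 s
}

# Keyword arguments for psycopg2.connect(), passed through to libpq.
POSTGRES_OPTIONS = {
    "connect_timeout": 3,                    # seconds to establish a connection
    "options": "-c statement_timeout=5000",  # server cancels queries after 5000 ms
}

# Usage (requires redis and psycopg2 to be installed):
#   r = redis.from_url(REDIS_URL, **REDIS_OPTIONS)
#   conn = psycopg2.connect(os.environ["DATABASE_URL"], **POSTGRES_OPTIONS)
```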

You Need Both

Although you can catch an API timeout with requests, heavy traffic and other situations can still leave your app relying on the gunicorn timeout. So it is important to implement both of these strategies.

Low Timeout Limitations

Timeouts are great and all, but sometimes setting a low timeout cuts off a critical capability of your app.

For example, if it takes 15 seconds to generate a page that is then served via cache, setting a timeout of 10 seconds will eliminate your ability to load that page. Or maybe you need to upload files and that takes 20 seconds. Then you cannot set a gunicorn timeout lower than 20 seconds.

The bottom line is that your gunicorn timeout is limited by the single function that takes the longest to run.
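That constraint is simple arithmetic. A sketch, assuming you know the worst-case duration of each endpoint (for example from your logs); the endpoint paths are hypothetical, the 15 and 20 second figures come from the examples in the previous paragraph, and the 5 second headroom is an arbitrary choice.

```python
import math

HEROKU_ROUTER_TIMEOUT = 30  # seconds before the router gives up with an H12


def minimum_gunicorn_timeout(worst_case_seconds, headroom=5):
    """Smallest timeout that still lets the slowest endpoint finish."""
    slowest = max(worst_case_seconds, key=worst_case_seconds.get)
    return math.ceil(worst_case_seconds[slowest] + headroom), slowest


def too_slow_for_heroku(worst_case_seconds):
    """Endpoints that cannot finish before the router times out anyway."""
    return [path for path, secs in worst_case_seconds.items()
            if secs >= HEROKU_ROUTER_TIMEOUT]


timings = {"/cached-page": 15, "/upload": 20, "/": 0.6}  # hypothetical endpoints
print(minimum_gunicorn_timeout(timings))  # (25, '/upload')
print(too_slow_for_heroku(timings))       # []
```

Any endpoint in the second list needs refactoring regardless of the gunicorn setting, and the first number only drops once the slowest endpoint gets faster.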

The solution is to refactor those parts of your app. You can do this by:

- Using background jobs to process long-running tasks

- Uploading files directly to S3

- Improving slow database queries by adding an index

- Caching via background jobs
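On Heroku, background jobs usually mean a worker dyno pulling jobs from a queue (RQ or Celery backed by Redis are common choices). As a stand-in that only shows the shape of the idea, here is an in-process sketch using the standard library's `queue` and a thread. `enqueue`, `results` and `build_report` are hypothetical names, and unlike a real job queue this loses pending jobs whenever the process restarts.

```python
import queue
import threading
import time
import uuid

jobs = queue.Queue()
results = {}  # job_id -> (status, value)


def worker():
    """Run queued jobs one at a time, outside the request/response cycle."""
    while True:
        job_id, func, args = jobs.get()
        try:
            results[job_id] = ("done", func(*args))
        except Exception as exc:
            results[job_id] = ("failed", repr(exc))
        finally:
            jobs.task_done()


def enqueue(func, *args):
    """Record the job and return its id immediately, as a web view would."""
    job_id = uuid.uuid4().hex
    results[job_id] = ("queued", None)
    jobs.put((job_id, func, args))
    return job_id


threading.Thread(target=worker, daemon=True).start()


def build_report(n):
    """Hypothetical long-running task."""
    time.sleep(0.1)
    return sum(range(n))


job_id = enqueue(build_report, 1000)  # the view returns right away with job_id
jobs.join()                           # demo only: wait for the worker to finish
print(results[job_id])                # ('done', 499500)
```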

Summary – Timeouts Make Your App Stable and Resilient

So where should you add timeouts? Everywhere! We need service-level timeouts so we know when our services are having problems. We need gunicorn timeouts for unforeseen circumstances and so that our app recovers quickly after being overloaded.

Remember, lowering timeouts is an iterative process. Optimize your app, then lower your timeouts. With proper timeouts in place your app will handle slowdowns and outages well, recovering very quickly while allowing developers to identify the issues involved.

Source: https://pycare.com/timeouts-on-heroku-its-probably-you/
